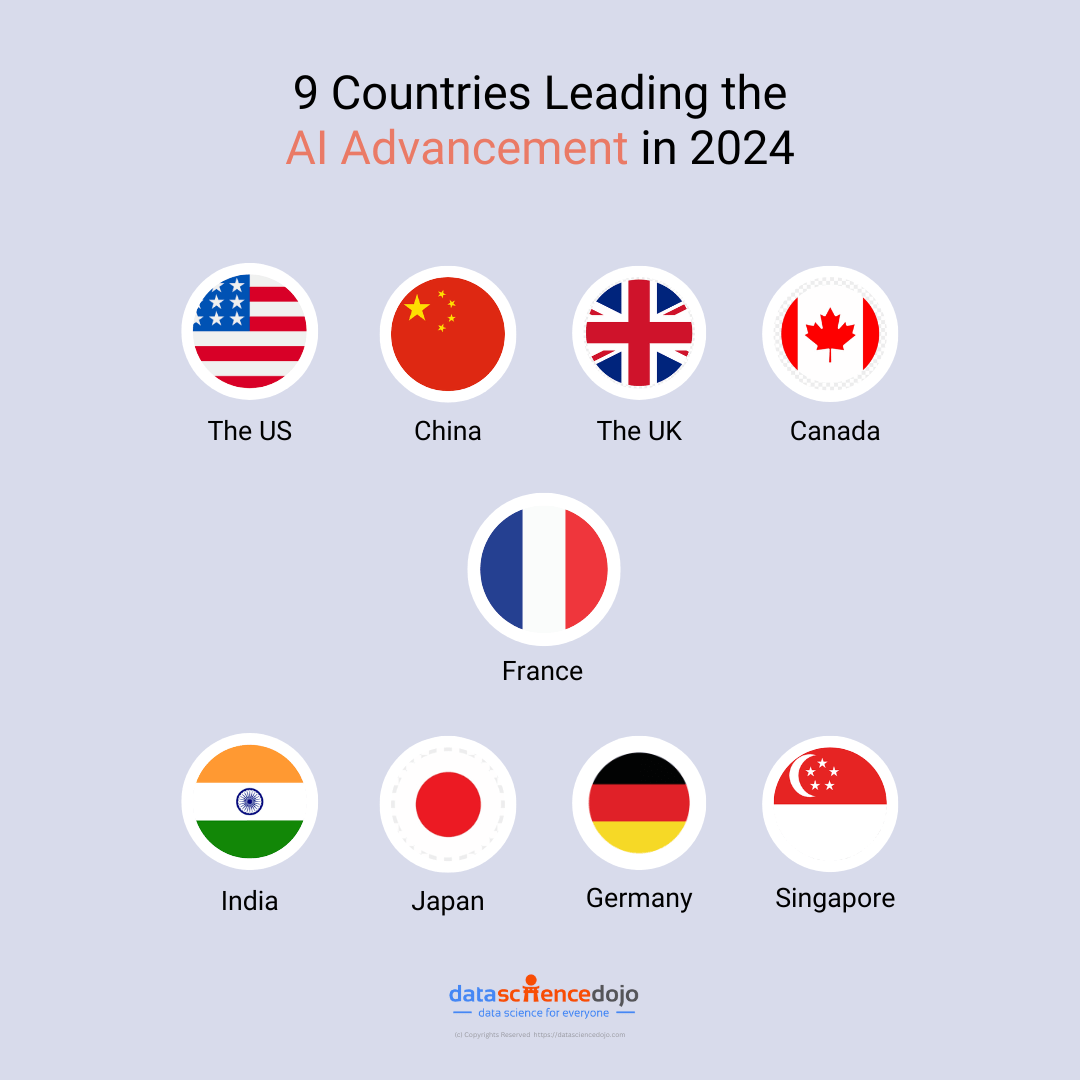

In the rapidly growing digital world, AI advancement is driving the transformation toward improved automation, better personalization, and smarter devices. In this evolving AI landscape, every country is striving to make the next big breakthrough.

In this blog, we will explore the global progress of artificial intelligence, highlighting the countries leading AI advancement in 2024.

Top 9 countries leading AI development in 2024

Let’s look at the nine leading countries that are hubs for AI advancement in 2024, exploring their contributions and efforts to excel in the digital world.

The United States of America

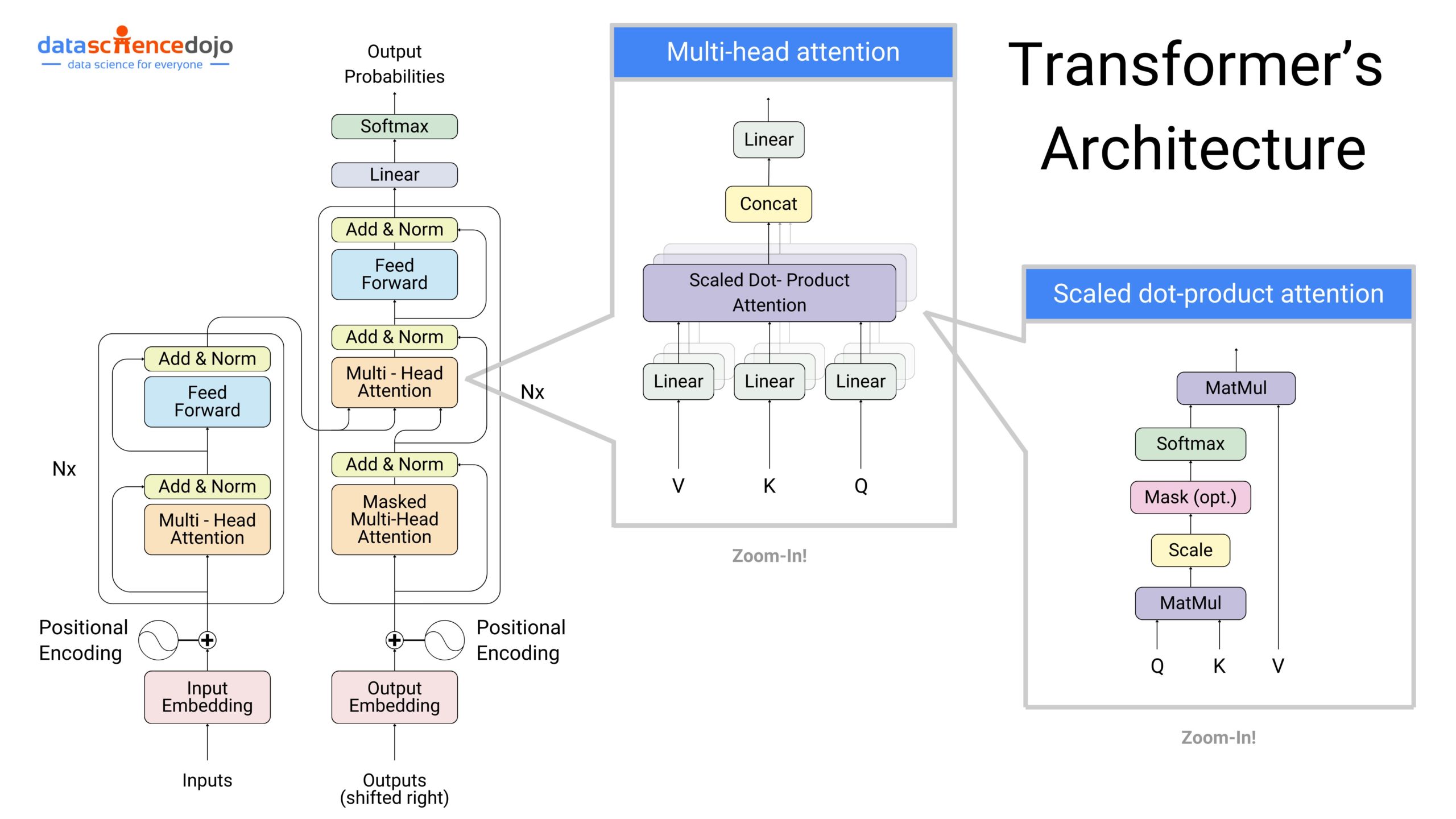

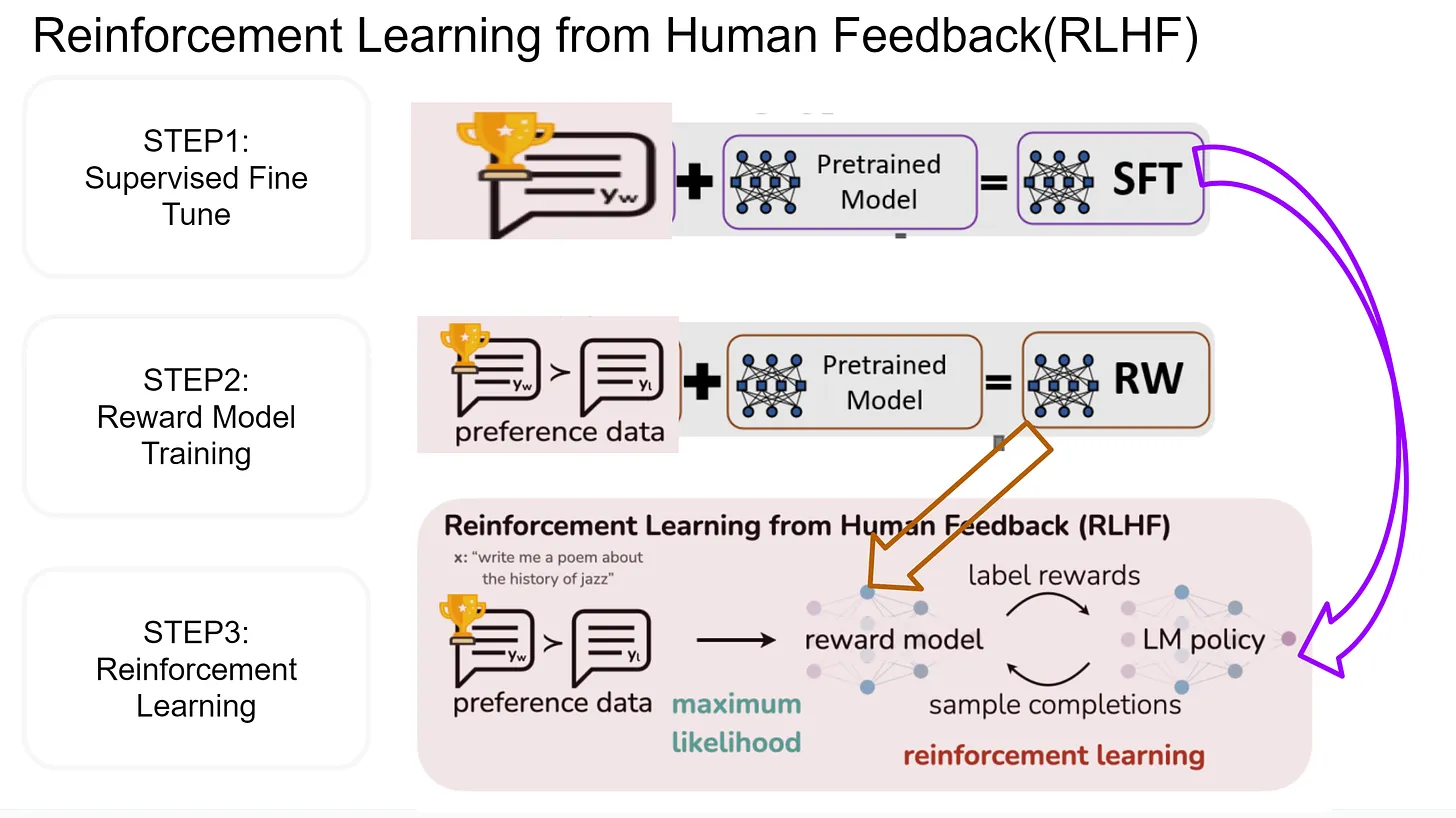

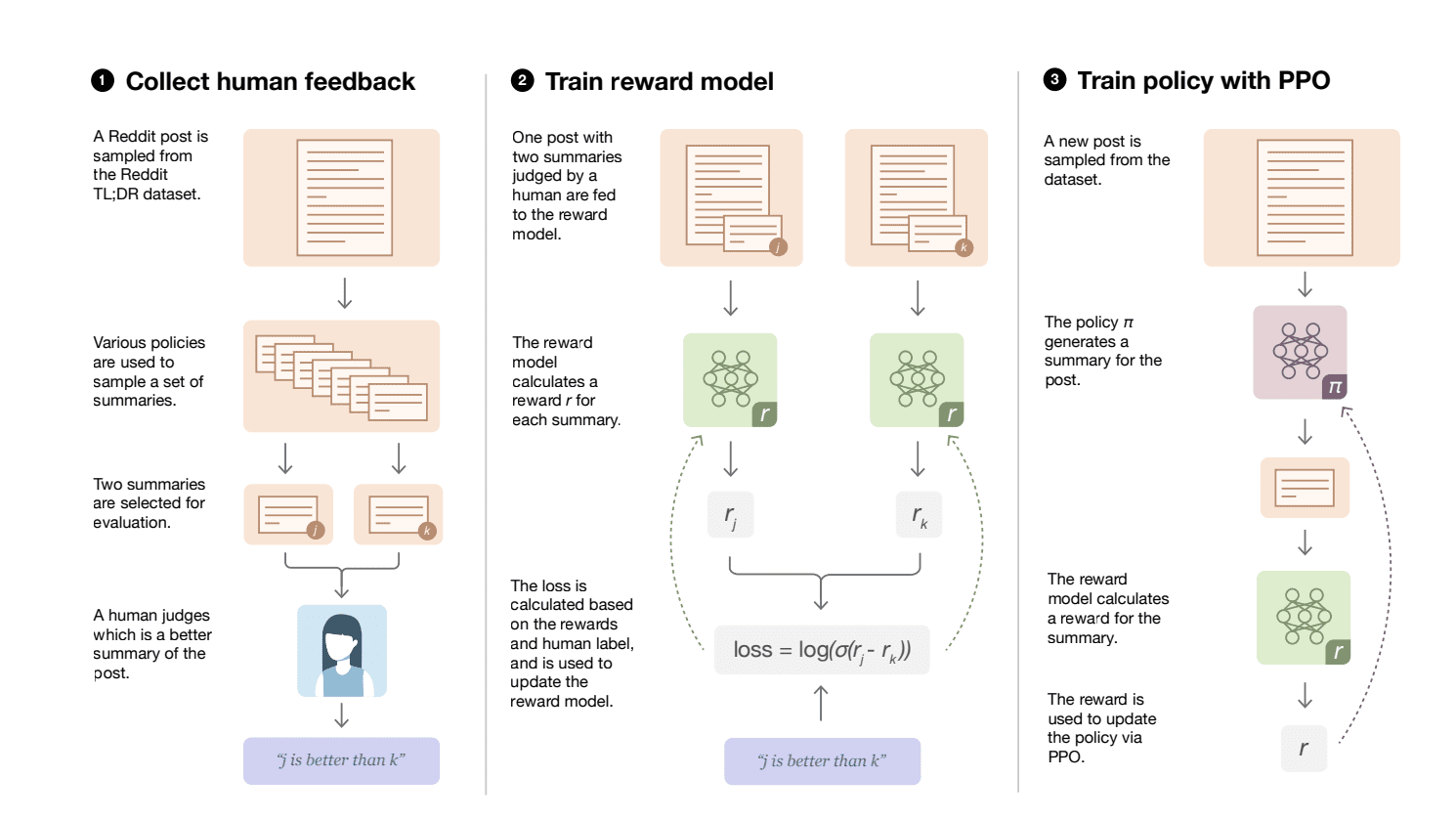

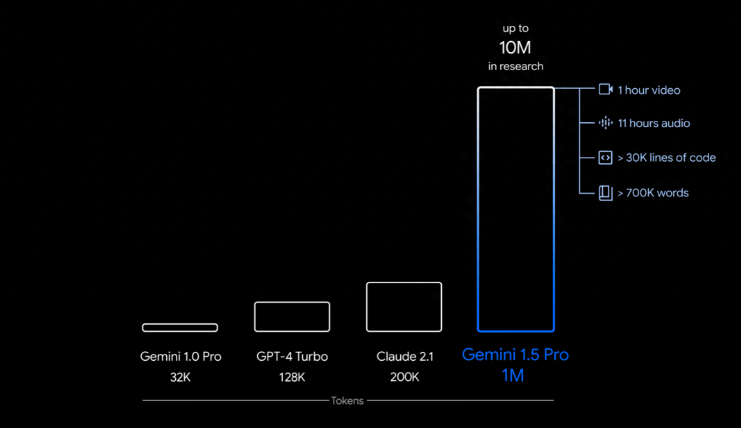

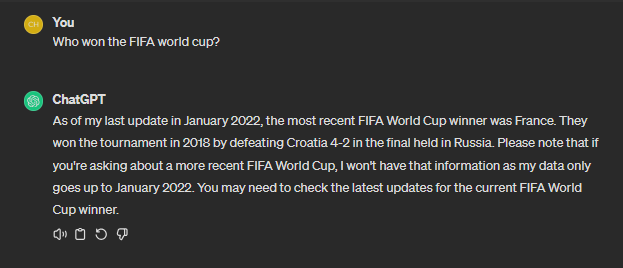

Home to leading tech giants, including OpenAI, Google, and Meta, the United States has been leading the global AI race. The contributions of these companies in the form of GPT-4, Llama 2, Bard, and other AI-powered tools have led to transformational changes in the world of generative AI.

The US continues to hold its leading position in AI advancement in 2024, with a high concentration of top-tier AI researchers fueled by the tech giants operating from Silicon Valley. Moreover, government support and initiatives foster collaboration, promising continued progress in AI.

The Biden administration’s recent focus on ethical considerations for AI is another proactive step by the US toward suitable regulation of AI advancement. This focus on responsible AI development can be seen as a positive sign for the future.

Explore the key trends of AI in digital marketing in 2024

China

The next leading player in line is China, powered by companies like Tencent, Huawei, and Baidu. New releases, including Tencent’s Hunyuan large language model and Huawei’s Pangu, are guiding the country’s AI advancements.

A strategic focus on specific research areas in AI, government funding, and a large population that generates massive amounts of data are some of the favorable factors promoting China’s technological development in 2024.

Moreover, China is known for its rapid commercialization, bringing AI products to market quickly. A subsequent benefit is the quick collection of real-world data and user feedback, which supports further refinement of AI technologies. This positions China to make significant strides in the field of AI in 2024.

The United Kingdom

The UK remains a significant contributor to the global AI race, boasting several avenues for AI advancement, including DeepMind, an AI development lab. Moreover, it hosts world-class universities like Oxford, Cambridge, and Imperial College London, which are at the forefront of AI research.

The government also promotes AI advancement through investment and incentives, fostering a startup culture in the UK. This has led to the development of AI companies like Darktrace and BenevolentAI, supported by an ecosystem that provides access to funding, talent, and research infrastructure.

Thus, the government’s commitment to and focus on responsible AI, along with the country’s strong research tradition, promise a growing future for AI advancement.

Canada

With top AI-powered companies like Cohere, Scale AI, and Coveo operating from the country, Canada has emerged as a leading player in the world of AI advancement. The government’s focus on initiatives like the Pan-Canadian Artificial Intelligence Strategy has also boosted AI development in the country.

Moreover, research hubs and top AI talent in institutes like the Montreal Institute for Learning Algorithms (MILA) and the Alberta Machine Intelligence Institute (AMII) promote an environment of development and innovation. This has also led to collaborations between academia and industry to accelerate AI advancement.

Canada is being strategic about its AI development, focusing on sectors where it has existing strengths, including healthcare, natural resource management, and sustainable development. Thus, Canada’s unique combination of strong research capabilities, ethical focus, and collaborative environment positions it as a prominent player in the global AI race.

France

While not at the top like the US or China, France is leading AI research in the European Union. Its strong academic base includes research institutes like Inria and the 3IA Institutes, which prioritize long-term advancements in the field of AI.

The French government also actively supports research in AI, promoting the growth of innovative AI startups like Criteo (advertising) and Owkin (healthcare). Hence, the country pursues fundamental research alongside practical applications, giving France a significant advantage in the long run.

India

India is quietly emerging as a significant player in AI research and technology as the Indian government pours resources into initiatives like ‘India AI’ and fosters a skilled workforce through education programs. This is fueling a vibrant startup landscape where homegrown companies like SigTuple are developing innovative AI solutions.

What truly sets India apart is its focus on social impact, using AI to tackle challenges like healthcare access in rural areas and to improve agricultural productivity. India also recognizes the importance of ethical AI development, addressing potential biases to ensure the responsible use of this powerful technology.

Hence, the focus on talent, social good, and responsible innovation makes India a promising contributor to the world of AI advancement in 2024.

Learn more about the top AI skills and jobs in 2024

Japan

With an aging population and strict immigration laws, Japanese companies have become champions of automation. This has resulted in the country developing solutions with real-world AI implementation, making it a leading contributor to the field.

While Japanese companies are heavily invested in AI that can streamline processes and boost efficiency, their approach goes beyond just getting things done. Japan is also focused on collaboration between research institutions, universities, and businesses, and it prioritizes safety, with regulations and institutes dedicated to ensuring trustworthy AI.

Moreover, the country is a robotics powerhouse, integrating AI to create next-gen robots that work seamlessly alongside humans. So, while Japan might not be the first with every breakthrough, it is surely leading the way in making AI practical, safe, and collaborative.

Germany

Germany is at the forefront of a new industrial revolution in 2024 with Industry 4.0. Tech giants like Siemens and Bosch are using AI to supercharge factories with intelligent robots, optimized production lines, and smart logistics systems.

The government also promotes AI advancement through funding for collaborations, especially between academia and industry. The focus on AI development has also led to the launch of startups like Volocopter, Aleph Alpha, DeepL, and Parloa.

At the same time, development is focused on the ethical aspects of AI, addressing potential biases in the technology. Thus, Germany’s focus on practical applications, responsible development, and Industry 4.0 makes it a true leader in this exciting new era.

Singapore

Singapore has made it onto the global map of AI advancement with its strategic approach to research in the field. The government welcomes international researchers to contribute to its AI development. This has resulted in big names like Google setting up shop there, promoting open collaboration using cutting-edge open-source AI tools.

Some of its notable startups include Biofourmis, Near, Active.Ai, and Osome. Moreover, Singapore leverages AI for applications beyond the tech race. Its ‘Smart Nation’ initiative uses AI for efficient urban planning and improved public services.

In addition, with its focus on social challenges and the ethical use of AI, Singapore has a versatile approach to AI advancement. This makes the country a promising contender to become a leader in AI development in the years to come.

The future of AI advancement

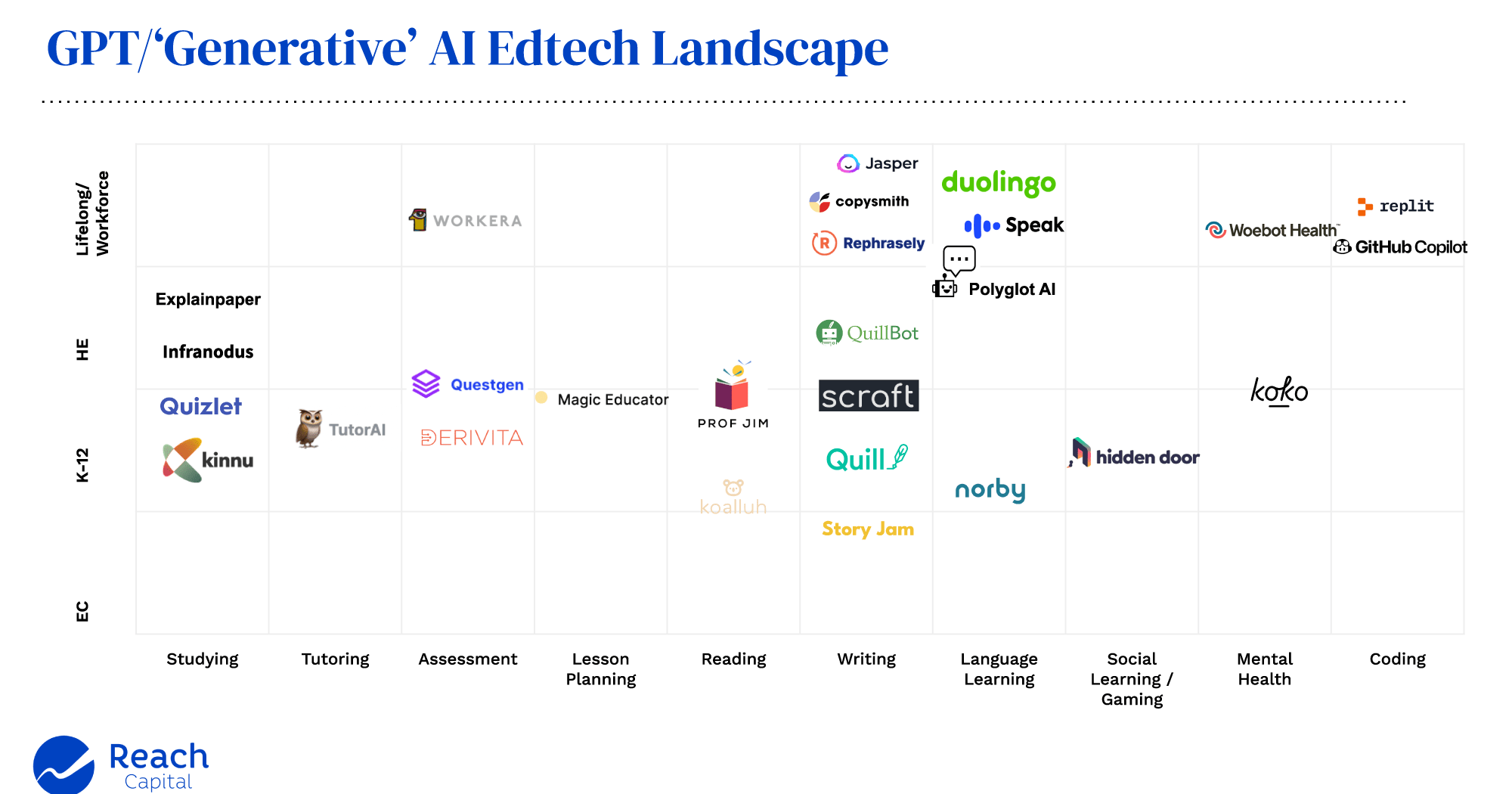

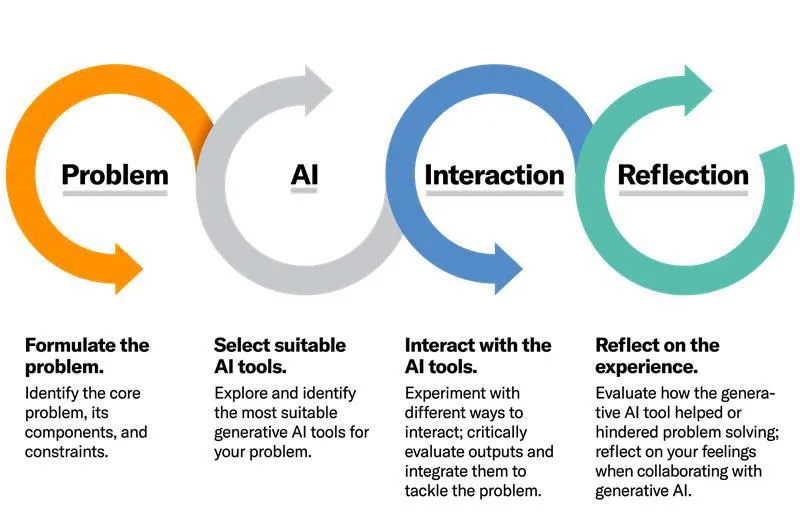

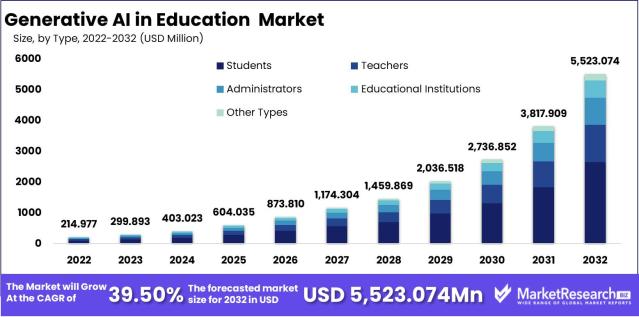

The versatility of AI tools promises a future for the technology across all kinds of fields. From personalizing education to aiding scientific discoveries, we can expect AI to play a crucial role in every sector. Moreover, the focus of the leading nations on the ethical impacts of AI points to a growing emphasis on responsible development.

Hence, it is clear that the rise of AI is inevitable. The worldwide focus on AI advancement creates an environment that promotes international collaboration and the democratization of AI tools, leading to greater innovation and better accessibility for all.